Does this code look familiar? Most of us have, at some point, written JavaScript like this to do a simple login validation. Right?

The real problem comes when we try to write unit tests for this kind of javascript. The issue is the code which is completely mixed with HTML and inline event handlers.

So what? Big Deal. Why not write test cases to test HTML dependencies and DOM manipulations?

Yeah! You could. But you would end up writing ONLY test cases rather than writing actual functions.

How about we refactor the code so that it's testable?

Jasmine Specs to the rescue

Jasmine - An automated test framework for Javascript.

Say you have a function which returns the sum of two numbers

'expect' is equivalent to 'assert' and 'toEqual' is the matcher.

Let's spice this up a little bit. Say, we validate the input params (num1 & num2) before returning the sum

Now, what if the validateParams function fails when testing the sum function? We are not bothered about the functionality of the validateParams function when testing the sum function right? All we need is the function to return true/false based on then input so that the sum function can go ahead and do its job. So why not mock the function validateParams?

Jasmine provides a way to spy on functions. No matter how the validateParams function works, we would still be able to test the sum function.

This way, its easier to fake ajax calls, spy on library functions. I have put together a simple login app with bare minimum javascript. It's integrated with maven and the specs can be run as a part of your build without having to make use of the browser.

Jasmine, through the jasmine-jquery plugin also lets you use json fixtures to test functions which take inputs.

getJSONFixture(*.json) - loads the json data and makes it available for your specs. By default, fixtures are loaded from this location -

spec/javascripts/fixtures/json.

Jasmine has a lot more to offer, with support to fake events, fake timers etc. Do read the documentation.

Steps:

1. Clone the project from github.

2. Navigate to the path where you find pom.xml (pom.xml is configured with jasmine-maven-plugin and the saga-maven-plugin to generate coverage report)

3. Run "mvn clean test" to run the specs and "mvn clean verify" to run the specs & generate the coverage report.

4. Open the total-report.html and see the magic unfold in front your eyes ;-)

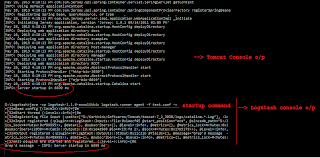

Jasmine specs run from the terminal

Coverage Report

To conclude :

Although, I ended up writing unit test cases for two whole modules, there was nothing conclusive about these tests. Yeah! Agreed that these tests help you test the flow. Which block of code gets called for what kind of input sort of way but that is all about it.

I had to do quite a bit of refactoring to make the already existing javascript functions testable.

Similar opinions expressed on this stackoverflow link as well.

Do let me know what you think.

PS: Title courtesy - This Post

```javascript
function formSubmit() {
  var userName = $("#userName").val();
  var password = $("#password").val();
  if (!isValid(userName, password)) {
    return false;
  }
  $.ajax({
    type: 'POST',
    url: "login",
    dataType: 'text',
    // Pass an object so jQuery URL-encodes the values (a raw "&" in a password would otherwise break the request).
    data: { username: userName, password: password },
    success: function (data) {
      alert('Login Success');
    },
    error: function (xhr, err) {
      alert('Login Failed.' + xhr.status);
    }
  });
}
```

The real problem comes when we try to write unit tests for this kind of JavaScript. The issue is that the code is completely mixed up with HTML and inline event handlers.

So what? Big deal. Why not write test cases that cover the HTML dependencies and DOM manipulations as well?

Yeah! You could. But you would end up writing nothing but test cases rather than actual functions.

How about we refactor the code so that it's testable?

```javascript
function formSubmit() {
  var cred = readValuesFromUI(); // separate out the function which reads values from the UI
  var val = validate(cred);      // separate out the validations
  if (val.isValid) {
    doLogin(cred);               // AJAX call to the server
  } else {
    alert('Invalid Credentials.');
  }
}
```
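Pulling the validation out of formSubmit means it can now be exercised without a browser, jQuery or a login page. The post doesn't show validate, so here is a minimal sketch, assuming cred is an object with userName and password fields and that the rule is simply that neither may be blank (a hypothetical rule, not the app's actual one):

```javascript
// Hypothetical validate(): the rules below are assumptions for illustration.
// cred is assumed to look like { userName: "...", password: "..." }.
function validate(cred) {
  var errors = [];
  if (!cred.userName || cred.userName.trim() === "") {
    errors.push("User name is required.");
  }
  if (!cred.password) {
    errors.push("Password is required.");
  }
  // formSubmit only reads isValid; errors gives specs something more precise to check.
  return { isValid: errors.length === 0, errors: errors };
}

// No DOM and no jQuery needed: call it directly.
console.log(validate({ userName: "alice", password: "secret" }).isValid); // true
console.log(validate({ userName: "  ", password: "" }).errors.length);    // 2
```

Returning an object rather than a bare boolean is a design choice: formSubmit only cares about isValid, but a spec can assert on exactly which rule failed.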

Jasmine Specs to the rescue

Jasmine is an automated test framework for JavaScript.

Say you have a function that returns the sum of two numbers, along with a Jasmine suite that tests it:

```javascript
function sum(num1, num2) {
  return num1 + num2;
}

describe("this is a suite which tests the function sum with various inputs", function () {
  it("this is a spec which tests +ve scenario", function () {
    var s = sum(1, 2);
    expect(s).toEqual(3);
  });
});
```

`describe` groups specs into a suite, `it` defines a single spec, `expect` is the equivalent of an assert, and `toEqual` is the matcher.

Let's spice this up a little. Say we validate the input parameters (num1 and num2) before returning the sum:
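To make the expect/matcher split concrete, here is a toy version written from scratch. It is NOT how Jasmine implements these functions; it only shows the idea that expect wraps the actual value and the matcher (here a naive structural toEqual) does the checking and fails loudly:

```javascript
// Toy expect/toEqual for illustration only; not Jasmine's implementation.
// Naive structural equality: compares own enumerable keys recursively.
function deepEqual(a, b) {
  if (a === b) return true;
  if (typeof a !== "object" || typeof b !== "object" || a === null || b === null) return false;
  var keysA = Object.keys(a);
  var keysB = Object.keys(b);
  if (keysA.length !== keysB.length) return false;
  return keysA.every(function (k) {
    return Object.prototype.hasOwnProperty.call(b, k) && deepEqual(a[k], b[k]);
  });
}

// expect() wraps the actual value and returns an object of matchers.
function expect(actual) {
  return {
    toEqual: function (expected) {
      if (!deepEqual(actual, expected)) {
        throw new Error("Expected " + JSON.stringify(actual) + " to equal " + JSON.stringify(expected));
      }
    }
  };
}

function sum(num1, num2) {
  return num1 + num2;
}

expect(sum(1, 2)).toEqual(3);               // passes silently
expect({ total: 3 }).toEqual({ total: 3 }); // passes: structural, not reference, equality
```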

```javascript
function sum(num1, num2) {
  var isValid = validateParams(num1, num2);
  if (isValid) {
    return num1 + num2;
  } else {
    return -1;
  }
}

function validateParams(num1, num2) {
  // some logic
}
```
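The post deliberately leaves validateParams open. Purely as an illustration, assume (hypothetically) that "valid" means both arguments are finite numbers. Note how sum's expected output now depends on this rule, which is exactly the coupling discussed next:

```javascript
// Hypothetical validateParams: the assumed rule is "both arguments are finite numbers".
// Number.isFinite does not coerce, so strings such as "1" are rejected.
function validateParams(num1, num2) {
  return Number.isFinite(num1) && Number.isFinite(num2);
}

function sum(num1, num2) {
  var isValid = validateParams(num1, num2);
  return isValid ? num1 + num2 : -1; // -1 signals invalid input, as in the post
}

console.log(sum(1, 2));   // 3
console.log(sum("1", 2)); // -1 (without the check, "1" + 2 would give the string "12")
```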

Now, what if validateParams fails while we are testing sum? When testing sum, we are not concerned with how validateParams works, right? All we need is for it to return true or false for the given input so that sum can go ahead and do its job. So why not mock validateParams?

Jasmine provides a way to spy on functions: `spyOn` swaps the real function for a spy whose return value we dictate. No matter how validateParams works, we can still test sum.

```javascript
describe("this is a suite", function () {
  it("this is a spec where validateParams returns true", function () {
    // Replace the global validateParams with a spy that always returns true.
    // (Jasmine 1.x syntax; in Jasmine 2.x and later use .and.returnValue(true).)
    spyOn(window, 'validateParams').andReturn(true);
    var s = sum(1, 2);
    expect(s).toEqual(3);
  });

  it("this is a spec where validateParams returns false", function () {
    spyOn(window, 'validateParams').andReturn(false);
    var s = sum(1, 2);
    expect(s).toEqual(-1);
  });
});
```
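Under the hood, a spy is just a replacement function installed on an object, which records its calls and returns whatever you told it to. The sketch below is a hand-rolled toy, not Jasmine's code; spyOnWithReturn and the calculator object are hypothetical names, and calculator stands in for window because Node has no window:

```javascript
// Toy equivalent of spyOn(obj, name).andReturn(value); not Jasmine's implementation.
function spyOnWithReturn(obj, name, value) {
  var original = obj[name];
  var spy = function () {
    spy.calls.push(Array.prototype.slice.call(arguments)); // record the arguments
    return value;                                          // never calls the real function
  };
  spy.calls = [];
  spy.restore = function () { obj[name] = original; };     // Jasmine does this for you after each spec
  obj[name] = spy;
  return spy;
}

// Hypothetical namespace standing in for window.
var calculator = {
  validateParams: function (num1, num2) {
    throw new Error("the real validation should not run in this spec");
  },
  sum: function (num1, num2) {
    // validateParams is looked up at call time, so an installed spy is picked up.
    return calculator.validateParams(num1, num2) ? num1 + num2 : -1;
  }
};

var spy = spyOnWithReturn(calculator, "validateParams", false);
console.log(calculator.sum(1, 2)); // -1, although the real validation was never consulted
console.log(spy.calls.length);     // 1
spy.restore();
```

Jasmine's real spies offer more (for example the toHaveBeenCalledWith matcher), but the mechanism is the same: swap the function, control its result, and inspect how it was called.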

The same technique makes it easy to fake AJAX calls and spy on library functions. I have put together a simple login app with bare-minimum JavaScript. It's integrated with Maven, so the specs can run as part of your build without needing a browser.
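Faking AJAX works the same way. In Jasmine 1.x you might write spyOn($, 'ajax').andCallFake(function (options) { options.success('OK'); }). To keep the sketch runnable outside the browser, the one below assumes (hypothetically) that doLogin receives the jQuery-like object and the alert function as parameters, and hands it a hand-rolled fake whose ajax() answers immediately:

```javascript
// Hypothetical doLogin: $ and notify are injected so a spec can pass fakes.
// The post does not show doLogin's signature; this one is an assumption.
function doLogin(cred, $, notify) {
  $.ajax({
    type: "POST",
    url: "login",
    dataType: "text",
    data: { username: cred.userName, password: cred.password },
    success: function () { notify("Login Success"); },
    error: function (xhr) { notify("Login Failed." + xhr.status); }
  });
}

// Fake $ whose ajax() records the request and responds synchronously via `respond`.
function fakeJQuery(respond) {
  var requests = [];
  return {
    requests: requests,
    ajax: function (options) {
      requests.push(options);
      respond(options);
    }
  };
}

var messages = [];
var $fail = fakeJQuery(function (options) { options.error({ status: 401 }, "error"); });
doLogin({ userName: "alice", password: "wrong" }, $fail, function (m) { messages.push(m); });
console.log(messages[0]);           // Login Failed.401
console.log($fail.requests[0].url); // login
```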

Through the jasmine-jquery plugin, Jasmine also lets you use JSON fixtures to test functions that take inputs.

`getJSONFixture('*.json')` loads the JSON data and makes it available to your specs. By default, fixtures are loaded from:

spec/javascripts/fixtures/json

Jasmine has a lot more to offer, including support for fake events and fake timers. Do read the documentation.
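A sketch of fixture-driven testing: a table of inputs and expected results kept as JSON. To keep it self-contained, the JSON is inlined and parsed with JSON.parse; with jasmine-jquery you would put it in a file under spec/javascripts/fixtures/json (credentials.json is a hypothetical name) and load it with getJSONFixture('credentials.json'). The validation rule below is an assumption:

```javascript
// Inline stand-in for a fixture file such as credentials.json (hypothetical name).
var cases = JSON.parse(
  '[' +
  '{"input": {"userName": "alice", "password": "secret"}, "isValid": true},' +
  '{"input": {"userName": "", "password": "secret"}, "isValid": false},' +
  '{"input": {"userName": "alice", "password": ""}, "isValid": false}' +
  ']'
);

// Hypothetical validation rule (an assumption): neither field may be blank.
function validate(cred) {
  var valid = Boolean(cred.userName && cred.userName.trim() && cred.password);
  return { isValid: valid };
}

// One check per fixture entry; a Jasmine spec would call expect(...).toEqual(...) in this loop.
var failures = cases.filter(function (c) {
  return validate(c.input).isValid !== c.isValid;
});
console.log(failures.length === 0 ? "all fixture cases pass" : failures);
```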

Steps:

1. Clone the project from GitHub.

2. Navigate to the directory containing pom.xml (it is configured with the jasmine-maven-plugin, and with the saga-maven-plugin to generate the coverage report).

3. Run `mvn clean test` to run the specs, or `mvn clean verify` to run the specs and generate the coverage report.

4. Open total-report.html and watch the magic unfold before your eyes ;-)

Jasmine specs run from the terminal

Coverage Report

To conclude:

Although I ended up writing unit tests for two whole modules, there was nothing conclusive about these tests. Yes, agreed, they help you test the flow, in the sense of which block of code gets called for what kind of input, but that is about all.

I had to do quite a bit of refactoring to make the existing JavaScript functions testable.

Similar opinions are expressed in this Stack Overflow thread as well.

Do let me know what you think.

PS: Title courtesy of this post.